Artificial Neural Networks

Artificial neural networks (ANNs) are computational models inspired by the structure and function of the human brain. They learn to recognize patterns and make predictions from complex data, loosely modeled on the way biological neurons process information.

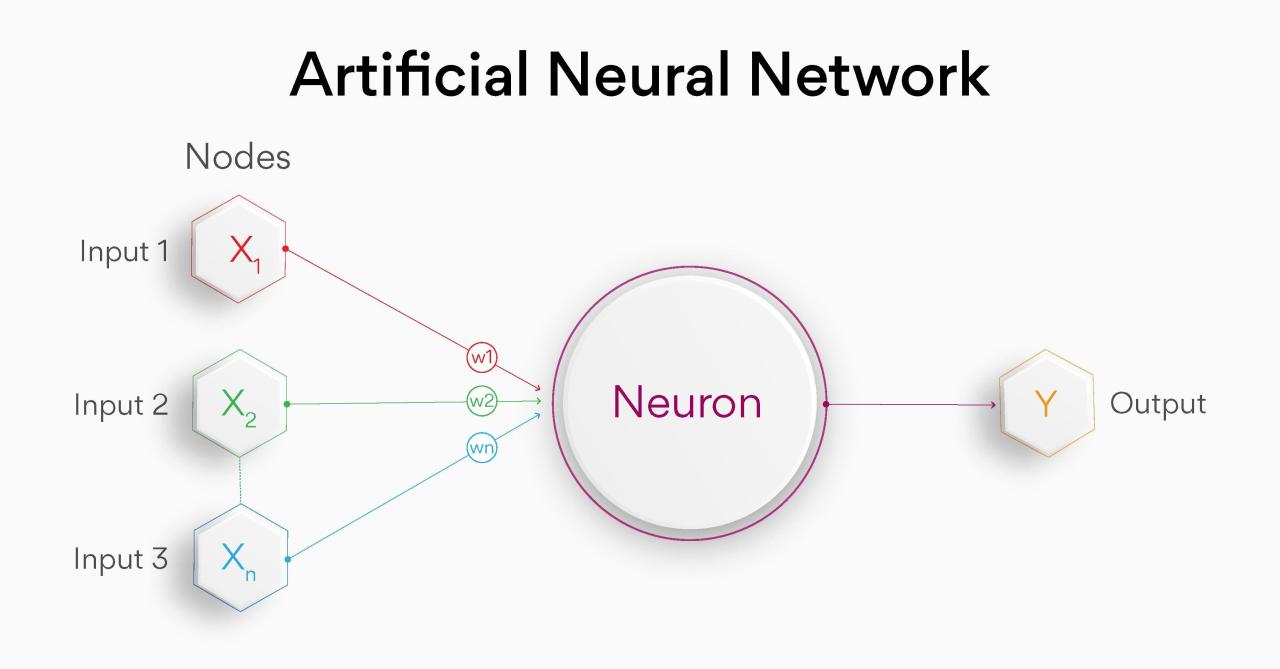

ANNs consist of layers of interconnected nodes, or “neurons,” each of which receives inputs, processes them, and produces an output. The connections between neurons have weights that determine the strength of the signal transmitted. As the network processes data, these weights are adjusted through a process called “learning” so that the network’s predictions become more accurate.

Types of Artificial Neural Networks

There are various types of ANNs, each with its strengths and applications:

- Feedforward Neural Networks: These networks have a single direction of data flow, from input to output layers, without any loops or feedback connections.

- Recurrent Neural Networks (RNNs): RNNs introduce feedback connections, allowing them to process sequential data and capture temporal dependencies.

- Convolutional Neural Networks (CNNs): CNNs are designed to process data with grid-like structures, such as images, and extract features using convolution operations.

- Generative Adversarial Networks (GANs): GANs consist of two competing networks, a generator and a discriminator. The generator learns to produce new data that resembles the training dataset, while the discriminator learns to distinguish generated samples from real ones.
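To make "convolution operations" more concrete, here is a minimal sketch of the sliding-window computation a convolutional layer applies, assuming a single-channel input, a stride of 1, and no padding. Like most deep learning libraries, it computes cross-correlation (the kernel is not flipped). The function name `conv2d_valid`, the toy image, and the hand-picked kernel are illustrative assumptions, not part of any particular library.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over `image` (stride 1, no padding) and return the feature map."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Multiply the kernel element-wise with the patch under it, then sum.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# A 4x4 image with a vertical edge between columns 1 and 2.
image = np.array([
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
], dtype=float)

# Hypothetical hand-picked vertical-edge detector; a CNN learns its kernel values.
kernel = np.array([
    [-1, 1],
    [-1, 1],
], dtype=float)

feature_map = conv2d_valid(image, kernel)
print(feature_map)
```

The feature map responds only in the middle column, where the kernel straddles the edge. In a trained CNN the kernel entries are learned weights, and a layer applies many such kernels in parallel.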

Applications of Artificial Neural Networks

ANNs have a wide range of applications across various industries:

- Image Recognition and Processing: CNNs are used for object detection, facial recognition, and image segmentation.

- Natural Language Processing: RNNs and transformers are used for machine translation, text classification, and sentiment analysis.

- Speech Recognition: ANNs are used to transcribe speech and improve speech recognition accuracy.

- Predictive Analytics: ANNs can forecast future events, such as stock prices or weather patterns, by learning from historical data.

Architecture of Artificial Neural Networks

Artificial neural networks are organized into layers of interconnected neurons. Each neuron computes a weighted sum of its inputs and passes the result through an activation function. The weights are adjusted during training to improve the network’s performance.
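The weighted-sum-then-activation computation can be written for a whole layer at once. The notation here is chosen for illustration: l indexes the layers, W^(l) is layer l’s weight matrix (row j holds the incoming weights of neuron j), b^(l) is its bias vector, φ is the activation function applied element by element, and a^(0) = x is the input vector.

```latex
\mathbf{a}^{(l)} = \varphi\!\left( W^{(l)} \, \mathbf{a}^{(l-1)} + \mathbf{b}^{(l)} \right),
\qquad l = 1, \dots, L,
\qquad \mathbf{a}^{(0)} = \mathbf{x}
```

Here L is the number of layers after the input, and a^(L) is the network’s output.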

Layers of an ANN

ANNs have three kinds of layers: an input layer, one or more hidden layers, and an output layer. The input layer receives the raw data, the hidden layers transform it into intermediate representations, and the output layer produces the network’s prediction.
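A minimal sketch of data flowing through the three kinds of layers, assuming a tiny fully connected network with 3 input features, 4 hidden neurons using ReLU, and 2 outputs. The layer sizes are arbitrary, and the weights are random placeholders rather than trained values; the names `W1`, `b1`, `W2`, `b2`, and `forward` are illustrative.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Illustrative layer sizes: 3 input features -> 4 hidden neurons -> 2 outputs.
n_in, n_hidden, n_out = 3, 4, 2

# Untrained, randomly initialized parameters (placeholders, not learned values).
W1 = rng.normal(size=(n_hidden, n_in))
b1 = np.zeros(n_hidden)
W2 = rng.normal(size=(n_out, n_hidden))
b2 = np.zeros(n_out)

def relu(z: np.ndarray) -> np.ndarray:
    return np.maximum(0.0, z)

def forward(x: np.ndarray) -> np.ndarray:
    """Pass one input vector through the hidden layer and then the output layer."""
    hidden = relu(W1 @ x + b1)   # hidden layer: weighted sums followed by activation
    output = W2 @ hidden + b2    # output layer: raw scores (no activation applied here)
    return output

x = np.array([0.5, -1.0, 2.0])  # the input layer simply holds the raw features
y = forward(x)
print(y.shape)  # (2,)
```

The output layer is left linear in this sketch; a classifier would typically apply a sigmoid or softmax to these scores.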

Neurons and Weights

Neurons are the basic processing units of ANNs. Each neuron multiplies every input by its corresponding weight, sums the weighted inputs (usually together with a bias term), and passes the result through an activation function. The weights determine how strongly each input influences the neuron’s output.
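A worked example of a single neuron, using made-up inputs, weights, and bias, with a sigmoid activation chosen for illustration:

```python
import math

inputs = [1.0, 2.0, 3.0]
weights = [0.5, -0.25, 0.1]
bias = 0.2

# Weighted sum: 1.0*0.5 + 2.0*(-0.25) + 3.0*0.1 + 0.2 = 0.5
z = sum(x * w for x, w in zip(inputs, weights)) + bias

# Sigmoid activation squashes z into the range (0, 1).
output = 1.0 / (1.0 + math.exp(-z))
print(round(z, 2), round(output, 3))  # 0.5 0.622
```

The negative weight on the second input pulls the sum down; training changes such weights to control how much each input pushes the output up or down.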

Activation Functions

Activation functions introduce non-linearity into ANNs, allowing them to model complex relationships. Common activation functions include:

- Sigmoid: Outputs a value between 0 and 1.

- Tanh: Similar to sigmoid, but outputs a value between -1 and 1.

- ReLU (Rectified Linear Unit): Outputs the input if it’s positive, otherwise outputs 0.

- Leaky ReLU: Similar to ReLU, but instead of outputting 0 for a negative input, it outputs the input multiplied by a small constant (for example, 0.01), producing a small negative value.
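The four activation functions listed above can each be written in a line of NumPy. The Leaky ReLU slope of 0.01 is a common default but is an assumption here; implementations let you choose it.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))        # outputs in (0, 1)

def tanh(z):
    return np.tanh(z)                       # outputs in (-1, 1)

def relu(z):
    return np.maximum(0.0, z)               # z if z > 0, else 0

def leaky_relu(z, alpha=0.01):
    return np.where(z > 0, z, alpha * z)    # assumed slope alpha for z <= 0

z = np.array([-2.0, 0.0, 3.0])
for f in (sigmoid, tanh, relu, leaky_relu):
    print(f.__name__, f(z))
```

Tanh is a rescaled sigmoid, tanh(z) = 2 * sigmoid(2z) - 1, which is why the two curves share the same S shape.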

Training Artificial Neural Networks

Training artificial neural networks involves adjusting the weights and biases of the network to minimize the error between the network’s output and the desired output. This process is iterative and can be computationally expensive, especially for large networks with many parameters.
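A minimal sketch of this training loop for the smallest possible network, a single sigmoid neuron. It assumes a binary cross-entropy loss as the error measure, a fixed learning rate of 0.5, 1000 iterations, and a toy dataset (logical OR) invented for illustration. The gradient is derived by hand with the chain rule, which is what backpropagation automates for networks with many layers.

```python
import numpy as np

# Toy dataset (illustrative): logical OR of two binary inputs.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 1, 1, 1], dtype=float)

rng = np.random.default_rng(seed=0)
w = rng.normal(scale=0.1, size=2)   # small random initial weights
b = 0.0
learning_rate = 0.5                 # assumed value

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bce_loss(p, y):
    eps = 1e-12  # avoid log(0)
    return -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))

losses = []
for epoch in range(1000):
    p = sigmoid(X @ w + b)               # forward pass
    losses.append(bce_loss(p, y))
    # For sigmoid + binary cross-entropy, dLoss/dz simplifies to (p - y).
    dz = (p - y) / len(y)
    grad_w = X.T @ dz                    # chain rule: dLoss/dw = x * dLoss/dz
    grad_b = dz.sum()
    # Gradient descent step: move the parameters against the gradient.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

predictions = (sigmoid(X @ w + b) > 0.5).astype(int)
print(predictions)
```

The recorded losses shrink as training proceeds, and the thresholded outputs match the OR targets once the weights have grown large enough to separate the classes.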

Several algorithms are involved in training artificial neural networks, including:

- Backpropagation: A widely used algorithm that computes the gradient of the error with respect to every weight and bias by applying the chain rule backward from the output layer. An optimizer, such as those below, then uses these gradients to update the weights and biases in the direction that reduces the error.

- Gradient descent: An iterative algorithm that updates the weights and biases in the direction of the negative gradient, gradually minimizing the error.

- Momentum: An extension of gradient descent that accumulates a running combination of past gradients, which accelerates convergence and damps oscillations.

- RMSProp: An adaptive learning rate algorithm that divides each parameter’s step by the root mean square of its recent gradients (a running average of the squared gradients).

- Adam: An adaptive learning rate algorithm that combines momentum and RMSProp, keeping running averages of both the gradients and their squares, with a bias correction for the first steps.
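These optimizers differ mainly in how they turn a gradient into a step. The sketch below applies each update rule to a one-parameter toy problem, minimizing f(theta) = (theta - 3)^2, whose gradient is 2(theta - 3). The toy loss, the function names, and the hyperparameters (learning rate 0.1, 500 steps, the beta and eps values) are illustrative assumptions; beta1 = 0.9 and beta2 = 0.999 are the commonly used Adam defaults. In a real network, backpropagation would supply the gradient for every weight and bias.

```python
import math

def grad(theta):
    """Gradient of the toy loss f(theta) = (theta - 3)^2."""
    return 2.0 * (theta - 3.0)

def gradient_descent(theta, lr=0.1, steps=500):
    for _ in range(steps):
        theta -= lr * grad(theta)                 # step against the gradient
    return theta

def momentum(theta, lr=0.1, beta=0.9, steps=500):
    velocity = 0.0
    for _ in range(steps):
        velocity = beta * velocity + grad(theta)  # decaying sum of past gradients
        theta -= lr * velocity
    return theta

def rmsprop(theta, lr=0.1, beta=0.9, eps=1e-8, steps=500):
    sq_avg = 0.0
    for _ in range(steps):
        g = grad(theta)
        sq_avg = beta * sq_avg + (1 - beta) * g * g   # running average of g^2
        theta -= lr * g / (math.sqrt(sq_avg) + eps)   # step scaled by RMS gradient
    return theta

def adam(theta, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    m, v = 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(theta)
        m = beta1 * m + (1 - beta1) * g          # first moment (momentum-like)
        v = beta2 * v + (1 - beta2) * g * g      # second moment (RMSProp-like)
        m_hat = m / (1 - beta1 ** t)             # bias correction for zero init
        v_hat = v / (1 - beta2 ** t)
        theta -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta

for name, optimize in [("gradient descent", gradient_descent), ("momentum", momentum),
                       ("RMSProp", rmsprop), ("Adam", adam)]:
    print(f"{name:>16}: theta = {optimize(0.0):.4f}")
```

On this toy problem, gradient descent, momentum, and Adam end up very close to theta = 3. RMSProp with a fixed learning rate may keep hovering in a small band around the minimum instead, because dividing by the root-mean-square gradient keeps its step size from shrinking as the gradient does; decaying the learning rate over time is a common remedy.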

To evaluate the performance of artificial neural networks, various metrics can be used. Accuracy, precision, recall, and F1 score apply to classification tasks, while MSE and RMSE apply to regression tasks:

- Accuracy: The percentage of correct predictions made by the network.

- Precision: The percentage of positive predictions that are actually correct.

- Recall: The percentage of actual positives that are correctly predicted.

- F1 score: The harmonic mean of precision and recall, providing a balanced measure of performance.

- Mean squared error (MSE): The average of the squared differences between the network’s output and the desired output.

- Root mean squared error (RMSE): The square root of the MSE, which expresses the typical size of the errors in the same units as the output.
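The metrics above are straightforward to compute by hand. This sketch uses only the Python standard library and assumes binary labels (1 = positive) for the classification metrics; the function names and the labels, predictions, and regression values are made up for illustration.

```python
import math

def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)

    accuracy = correct / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1

def regression_metrics(y_true, y_pred):
    """Mean squared error and root mean squared error."""
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    return mse, math.sqrt(mse)

# Made-up classification example: 8 labels and predictions.
labels      = [1, 0, 1, 1, 0, 0, 1, 0]
predictions = [1, 0, 0, 1, 1, 1, 1, 0]
print(classification_metrics(labels, predictions))

# Made-up regression example: targets and network outputs.
print(regression_metrics([3.0, 5.0, 2.0], [2.5, 5.0, 3.0]))
```

Here precision is 3/5 and recall is 3/4, so F1 (2/3) lies between them but closer to the smaller value, which is the effect of using a harmonic rather than an arithmetic mean.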